The previous post demonstrated how to build mpi4py so that we can write and run Python programs using MPICH. Once mpi4py is built and installed, it has to be tested.

Here, it is assumed that you have a machinefile that stores the IP addresses of all the nodes in the network. MPICH uses it to launch processes on the various nodes and to send and receive messages between them.
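Before running anything, it can help to sanity-check the machinefile. The following is a minimal sketch, not part of mpi4py or MPICH: `parse_machinefile` is a hypothetical helper, the addresses are made up, and it assumes the common layout of one host per line with an optional `:slots` suffix and `#` for comments.

```python
def parse_machinefile(text):
    """Return a list of (host, slots) pairs from machinefile text.

    Blank lines and lines starting with '#' are skipped. A line may be
    'host' or 'host:slots'; slots defaults to 1 when it is not given.
    """
    hosts = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        host, sep, slots = line.partition(":")
        hosts.append((host.strip(), int(slots) if sep else 1))
    return hosts


# Hypothetical addresses, for illustration only.
sample = """\
192.168.1.10:1
192.168.1.11
# a commented-out node
192.168.1.12:2
"""

for host, slots in parse_machinefile(sample):
    print(host, slots)
```

To check a real file, read it with `open(path).read()` and pass the text to the helper. If a node is missing from the result, it will not take part in the run.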

Inside the extracted mpi4py folder is another folder named demo. The demo folder has many Python programs that can be run to test that mpi4py works.

A good program to test with first is helloworld.py. The procedure to run it is:

cd ~/mpi4py/demo

mpiexec -np 4 -machinefile ~/mpitest/machinefile python helloworld.py

output:

If the output shows one greeting line per process and all the nodes are included, then it works.

Please note that ~/mpi4py/demo is the path to mpi4py on my system and should be replaced with the one on yours. The same goes for the path to the machinefile.

There are other programs in the demo folder that can be used. For example, there are some benchmark programs created by the Ohio State University:

- osu_bw.py : This program measures bandwidth. The master node sends out a series of fixed-size messages to a receiving node, and the receiver sends a reply only after all the messages have been received. The master node then calculates the bandwidth from the elapsed time and the number of bytes it sent.

- osu_bibw.py : This program is similar to the one above, but both nodes send and receive a series of messages.

- osu_latency.py : This program sends a message to another node and waits for the reply. This is repeated a number of times and the latency is calculated from the elapsed time.

There are many other programs in the demo folder that can be tested. All of them can be run in the same way that helloworld.py was run.
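The arithmetic behind these benchmarks can be sketched in a few lines. This is an illustration of the general idea, not the code of the OSU scripts: the function names are hypothetical, the input numbers are invented, and it assumes that latency is reported one-way, as half of the mean round-trip time.

```python
def bandwidth_mb_per_s(message_bytes, messages_sent, elapsed_s):
    """Bandwidth in MB/s: total bytes sent divided by the elapsed time."""
    total_bytes = message_bytes * messages_sent
    return total_bytes / elapsed_s / 1e6


def one_way_latency_us(elapsed_s, round_trips):
    """One-way latency in microseconds, taken as half the mean round trip."""
    return elapsed_s / (2 * round_trips) * 1e6


# Hypothetical measurements, not real benchmark results.
print(bandwidth_mb_per_s(message_bytes=1_000_000, messages_sent=64, elapsed_s=8.0))
print(one_way_latency_us(elapsed_s=0.5, round_trips=1000))
```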

Once the testing is done, MPI programs can be written in Python. The way MPI programs work is that all the nodes in the cluster should have the same program. Every process runs that program, but depending on conditions (typically its rank) it executes only a part of it, which is what allows parallel execution.

This also means that we can write two different programs, give them the same name, and store each one on a different node before running them. This can be used to create a server program stored on the master node and a client program stored under the same name on the worker nodes.
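To make the "same program, different part" idea concrete, here is a small sketch that simulates the ranks in a plain loop, so it runs without a cluster. The names `my_share` and `run` are hypothetical. In a real mpi4py program, rank and size would come from the communicator instead of a loop.

```python
def my_share(rank, size, items):
    """Return the items this rank is responsible for (round-robin split)."""
    return items[rank::size]


def run(rank, size, items):
    """The one program every process runs; its behaviour depends on rank."""
    share = my_share(rank, size, items)
    if rank == 0:
        return "rank 0 coordinates and also handles %s" % share
    return "rank %d handles %s" % (rank, share)


# Simulate four processes in a plain loop.
items = list(range(10))
for rank in range(4):
    print(run(rank, 4, items))
```

Each simulated rank picks a different slice of the same list, which is the condition-based split described above.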

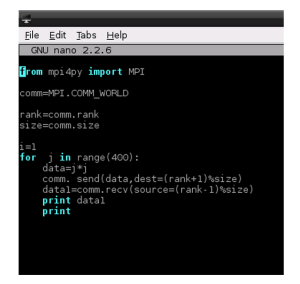

A sample MPI program:

The above program uses a communicator called MPI.COMM_WORLD, which holds the process information and provides the communication methods. The main features used are:

- comm.rank : It gives the rank of the calling process within the communicator.

- comm.size : It gives the number of processes in the communicator (equal to the number of nodes when one process runs per node).

- MPI.Get_processor_name() : It gives the name of the processor on which a particular process is running. In mpi4py this is a function of the MPI module rather than a method of the communicator.

- comm.send() : It is used to send data to the process indicated by the dest parameter.

- comm.recv() : It is used to receive data from the process indicated by the source parameter.

These are the basic functions, but many others are available that can be used to create an MPI-compliant Python program.
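The calls listed above fit together roughly as follows. This is a minimal sketch and not the sample program from this post: `describe` and `exchange` are hypothetical helpers, and the message contents and tag are arbitrary. The mpi4py import is kept inside the main guard so that the helpers can be read and tried without MPI installed.

```python
def describe(comm, processor_name):
    """Build a one-line description of the calling process."""
    return "Process %d of %d on %s" % (comm.rank, comm.size, processor_name)


def exchange(comm):
    """Rank 0 sends a small dict to rank 1; rank 1 receives and returns it.

    Every other rank (and any run with fewer than 2 processes) returns None.
    """
    if comm.size < 2:
        return None
    if comm.rank == 0:
        comm.send({"greeting": "hello"}, dest=1, tag=0)
        return None
    if comm.rank == 1:
        return comm.recv(source=0, tag=0)
    return None


if __name__ == "__main__":
    try:
        from mpi4py import MPI
    except ImportError:
        print("mpi4py is not installed; nothing to run")
    else:
        comm = MPI.COMM_WORLD
        print(describe(comm, MPI.Get_processor_name()))
        received = exchange(comm)
        if received is not None:
            print("rank %d received %s" % (comm.rank, received))
```

Saved under a name of your choice, it can be launched with the same mpiexec command that was used for helloworld.py.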

One thing to note: if edge conditions are not taken care of, and the program ends up addressing a rank beyond the processes that are actually available (for example when the number of processes requested does not match what the program expects), the execution fails. To avoid this, the rank can be wrapped around using the % size operation, as shown in the above example, so that the count loops back to the first processor to do the task.
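The wrap-around itself is plain modular arithmetic. The following is a minimal sketch of the idea, not the original sample program: `ring_neighbours` is a hypothetical helper, and the ranks are simulated in a loop so it runs without MPI.

```python
def ring_neighbours(rank, size):
    """Return (source, dest) for passing a message around a ring."""
    dest = (rank + 1) % size    # the last rank wraps around to rank 0
    source = (rank - 1) % size  # rank 0 receives from the last rank
    return source, dest


# Simulate four processes in a plain loop.
for rank in range(4):
    source, dest = ring_neighbours(rank, 4)
    print("rank %d receives from %d and sends to %d" % (rank, source, dest))
```

Without the % size, the last rank would try to send to a rank equal to size, which does not exist.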
